Falsificationism

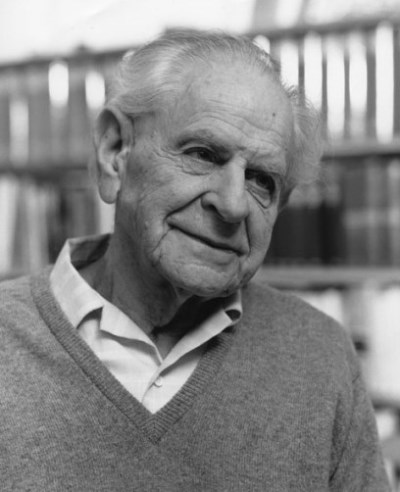

In the mid-twentieth century, Austrian-British philosopher Karl Popper advocated what remains, to this day, the most influential demarcation criterion. Impressed by the problems with hypothetico-deductivism, Popper proposed that the distinction between science and pseudoscience should be drawn by focusing on the negative side of theory testing: not how theories are confirmed, but how they are refuted.

Karl Popper (1902–1994)

As we saw in the previous section, there are several ways in which theory confirmation seems to rely on assumptions, presuppositions, and biases, casting doubt on the hypothetico-deductivist’s claim that the objectivity of theory testing is what distinguishes genuine science from pseudoscience. All of those objections, however, addressed the confirmation side of theory testing. The refutation of a theory, in contrast, looks like an objective and strictly logical matter. Consider the following argument:

If my theory is correct, then all of its predictions hold. Not all of my theory’s predictions hold. Therefore, my theory is incorrect.

The above argument has a valid logical form known as modus tollens, which, like all deductively valid inferences, is truth-preserving. As you may recall, this means that if both premises are true, the conclusion of the argument (i.e., the claim that my theory is incorrect) must be true as well.

Note: For a formal definition of modus tollens, see this page of my Skillful Reasoning e-book. If you’re unfamiliar with the logic symbols, you’ll want to start reading at the beginning of the chapter on Propositional Logic.
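The validity of modus tollens can be checked mechanically with a truth table. The following is a minimal sketch, not a formal proof system: the helper names `implies` and `is_valid` are my own, and the variables `t` ("my theory is correct") and `p` ("all of its predictions hold") simply stand in for the two claims in the argument above. For contrast, the sketch also checks the form that a successful prediction would take (if the theory is correct, its predictions hold; the predictions hold; therefore the theory is correct), which is the well-known invalid form called affirming the consequent.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion) -> bool:
    """An argument form is valid if no truth assignment makes
    every premise true while the conclusion is false."""
    for t, p in product([True, False], repeat=2):
        if all(premise(t, p) for premise in premises) and not conclusion(t, p):
            return False  # found a counterexample
    return True


# t: "my theory is correct"; p: "all of its predictions hold"
modus_tollens = is_valid(
    premises=[lambda t, p: implies(t, p), lambda t, p: not p],
    conclusion=lambda t, p: not t,
)
affirming_the_consequent = is_valid(
    premises=[lambda t, p: implies(t, p), lambda t, p: p],
    conclusion=lambda t, p: t,
)

print(modus_tollens)             # True
print(affirming_the_consequent)  # False
```

The asymmetry between the two results is the logical asymmetry between refutation and confirmation that Popper's proposal relies on.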

By focusing on the falsification of theories rather than on confirmation, Popper hoped to rescue the objectivity and strictly logical character of science. According to Popper, the right way to conduct scientific inquiry is to construct hypotheses that yield bold conjectures—predictions that might easily turn out to be false. Genuine scientific theories can be, at least potentially, falsified in a way that is fully objective, using the hypothetico-deductive method. Thus, the distinguishing feature of genuine science, according to Popper, is simply this:

- Genuine scientific theories are falsifiable, meaning they yield testable predictions which could turn out to be false. Pseudoscientific theories, in contrast, don’t make falsifiable predictions.

Note: This is a standard way of interpreting Popper’s demarcation proposal. However, as noted in “The Fine Print” below, Popper’s view of science was more radical than the simple falsificationism widely attributed to him. For further discussion of Popper’s view, I recommend “Lecture Two: Popper and the Problem of Demarcation” in Jeffrey L. Kasser’s lecture series on The Philosophy of Science, which is included in The Great Courses collection produced by The Teaching Company. (It’s also available on Audible.)

To drive home his point, Popper illustrated the distinction between falsifiable and non-falsifiable theories with many examples. Among his favorite targets for criticism were Karl Marx and Sigmund Freud, whose purportedly “scientific” theories of economics and psychoanalysis, respectively, were so elastic that almost any conceivable observation was compatible with them. Indeed, both Marx and Freud cited a wide array of observations as confirmation of their theories, but neither offered predictions specific enough to be proven false. Freudian psychoanalysis and Marxist economics were classic examples of pseudoscience.

In stark contrast to those bugbears, Einstein’s theory of gravity—general relativity—was a spectacular example of genuine, falsifiable science. Popper praised Einstein’s bold and straightforwardly testable prediction that gravity bends light, a prediction that could be checked (and potentially shown false) during a solar eclipse. In 1919, four years after Einstein proposed his theory of gravity, astronomer Arthur Eddington measured the apparent positions of stars during an eclipse and found that starlight was bent by the sun’s gravity just as Einstein said. Einstein quickly became a celebrated hero, and rightly so, according to Popper. Unlike those weaselly Freudians and Marxists, who dared not subject their theories to risky tests that might prove them wrong, genuine scientists are intellectual heroes who doggedly pursue the truth at the expense of their own hypotheses, relentlessly subjecting their ideas to tests that might refute them.

Perhaps partly because of its flattering portrayal of science as a kind of intellectual heroism, Popper’s demarcation proposal—called falsificationism—won widespread acceptance in the scientific community, and it is still taught in many science textbooks today. For example, a popular college-level textbook states:

The central property of scientific ideas is that they are testable and could be wrong, at least in principle. … As we stated above, a central aspect of the scientific method is that every scientific statement is subject to experimental or observational tests, so that it is possible to imagine an experimental result that would prove the statement wrong. … Such statements are said to be falsifiable. Statements that are not falsifiable are simply not part of science.

Note: This quotation is from an otherwise excellent textbook, which I don’t mean to denigrate in any way: James Trefil and Robert M. Hazen, The Sciences: An Integrated Approach, 9th Edition (New York: Wiley, 2023), 17.

Among philosophers of science, on the other hand, the popularity of falsificationism didn’t last long. Almost immediately, Popper’s contemporaries recognized that his demarcation criterion won’t work. Although falsifiability may be an important virtue of the best theories, it is neither necessary nor sufficient for science, because:

- Many nonscientific claims are falsifiable. For example, while dining at an Asian restaurant, a friend of mine received a fortune cookie that said “You will be hungry again in one hour.” That claim is falsifiable: just wait an hour and see if the prediction holds. Does this mean it was a scientific fortune cookie? Hardly. Similarly, many paradigmatic examples of pseudoscience, like astrology and phrenology, also make falsifiable (and falsified!) predictions. Saying demonstrably false things doesn’t automatically make you a scientist. So, falsifiability isn’t a sufficient condition for science.

- On the other hand, some scientific claims are not falsifiable. For example, the multiverse hypothesis of contemporary cosmology isn’t falsifiable in any straightforward sense. Similarly, probabilistic theories—such as those of statistical mechanics and quantum mechanics—don’t yield predictions that are falsifiable in Popper’s technical, logical sense. (Even a highly improbable outcome is, strictly speaking, compatible with the theory, so a modus tollens refutation of the theory is not possible.) Yet, all of these theories are accepted as genuine science. Thus, falsifiability isn’t a necessary condition for science either.
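The parenthetical point in the second item above can be made concrete with a toy probabilistic hypothesis. The following is only an illustrative sketch: the "fair coin" hypothesis, the imagined run of 100 heads, and the cutoff value `THRESHOLD` are all assumptions of mine, not examples drawn from statistical mechanics or quantum mechanics. The function `prob_at_least` is a hypothetical helper that computes an exact binomial tail probability.

```python
from fractions import Fraction
from math import comb


def prob_at_least(heads: int, flips: int, p_heads: Fraction = Fraction(1, 2)) -> Fraction:
    """Exact probability of `heads` or more heads in `flips` independent flips."""
    return sum(
        comb(flips, k) * p_heads**k * (1 - p_heads) ** (flips - k)
        for k in range(heads, flips + 1)
    )


# Imagined observation: 100 heads in 100 flips of a coin hypothesized to be fair.
p = prob_at_least(100, 100)

print(p == Fraction(1, 2**100))  # True: astronomically unlikely...
print(p > 0)                     # True: ...but not logically excluded

# An ARBITRARY conventional cutoff (an assumption, not part of the hypothesis).
THRESHOLD = Fraction(1, 10**6)
print(p < THRESHOLD)             # True: "rejected" only by convention
```

Because the probability is greater than zero, the observation does not contradict the hypothesis, so the first premise of a modus tollens refutation is unavailable. Any rejection has to come from a cutoff chosen by convention rather than from logic alone.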

To be fair, Popper never argued that falsifiability all by itself is a sufficient condition for science. So, the first objection above doesn’t necessarily refute his view, but it does demonstrate that falsifiability alone won’t serve as an adequate demarcation criterion. In order to solve the demarcation problem, Popper would have to specify a set of conditions that are both necessary and sufficient for genuine science. He might try to add further conditions which, in conjunction with falsifiability, would be jointly sufficient to render a hypothesis scientific. For example, Popper could say that a hypothesis is scientific if it is not only falsifiable but also has a certain form or logical structure. (One of Popper’s contemporaries, philosopher of science Carl Hempel, suggested that all scientific hypotheses can be formulated as universal categorical propositions, that is, claims of the form “everything in category A is in category B.”) That may eliminate the fortune cookie counterexample, but it leads to further problems: scientific hypotheses don’t all have the same logical form. Maybe some other condition would work, but I won’t pursue the possibility here. The point is, falsifiability clearly isn’t sufficient for science, and it’s far from obvious what additional condition(s) should be added to Popper’s demarcation proposal.

Note: Hempel was addressing the problem of confirmation rather than the demarcation problem. For further discussion of Hempel’s view, see the section on Hempel’s Theory of Confirmation by Instances in my e-book Skillful Reasoning: An Introduction to Formal Logic and Other Tools for Careful Thought.

Let’s turn to the second objection above: falsifiability isn’t even a necessary condition for science. How might Popper respond? Probabilistic theories could be accommodated, easily enough, by loosening the falsifiability criterion just a bit: Popper could say that a probabilistic theory is falsified when it assigns extremely low probability (below some arbitrary threshold) to a possible event that is subsequently observed. Moreover, Popper could respond to the multiverse objection by insisting that multiverse cosmology really is unscientific, so it isn’t a counterexample to the falsifiability criterion for genuine science. (In fact, Popper regarded Darwin’s principle of natural selection as unscientific for similar reasons.)

Note: The principle of natural selection is Darwin’s foundational idea that nature eliminates hereditary traits that don’t promote survival and reproduction but preserves traits that do. Popper described this principle as “almost tautological.” (A tautology is a claim that cannot be false due to its logical form, such as “either X or not X” or “if Y then Y.”) The principle of natural selection can be construed as a tautology, since it says organisms that are best suited for survival and reproduction are most likely to survive and reproduce. What possible observation could ever falsify such a claim? Popper concluded that “Darwinism is not a testable scientific theory, but a metaphysical research program—a possible framework for testable scientific theories.” Karl Popper, “Darwinism as a Metaphysical Research Program,” in M. Ruse (Ed.), But Is It Science? The Philosophical Question in the Creation/Evolution Controversy (Buffalo: Prometheus, 1988), 144-155.

However, there is a deeper problem in the vicinity, and this third objection spells trouble for Popper’s portrayal of falsification as an objective, logical matter:

- No scientific hypothesis yields falsifiable predictions all by itself. Testable predictions can be deduced from a hypothesis only when you add various kinds of information to it. However, almost any hypothesis (scientific or not) can be conjoined with information that will yield falsifiable predictions in a similar way, as illustrated with examples below.

A scientific hypothesis on its own makes no predictions at all. For instance, Newton’s laws by themselves don’t allow you to predict the trajectory of an arrow fired from a bow. In order to predict the arrow’s path, you also need to know the arrow’s initial position and velocity, and you need to know the strengths of the various forces (such as gravity and air resistance) acting on it. Moreover, in order to predict what you will actually see, you need to know something about human vision, about light, and about your own position relative to the arrow.
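The dependence of the prediction on extra information can be seen in a simple calculation. The sketch below is a deliberately idealized model, and every simplification in it is an assumption of mine: level ground, launch and landing at the same height, constant gravitational acceleration `G`, and no air resistance at all. The launch speeds and angles are hypothetical values chosen only for illustration.

```python
import math

G = 9.81  # m/s^2; assumed constant gravitational acceleration near Earth's surface


def landing_distance(speed: float, angle_deg: float, g: float = G) -> float:
    """Horizontal range of a projectile launched from ground level,
    ignoring air resistance (an idealizing assumption)."""
    angle = math.radians(angle_deg)
    return speed**2 * math.sin(2 * angle) / g


# Same laws of motion, different (hypothetical) initial conditions,
# and therefore different predicted landing points.
for speed, angle in [(50.0, 10.0), (50.0, 45.0), (70.0, 45.0)]:
    distance = landing_distance(speed, angle)
    print(f"speed={speed} m/s, angle={angle} deg -> range={distance:.1f} m")
```

The function cannot return anything until it is given a speed and an angle, and the number it returns is only as trustworthy as the simplifying assumptions built into it. If a real arrow landed somewhere else, the mismatch could be traced to the neglected air resistance or a mismeasured launch angle just as easily as to the laws of motion.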

In order to test any hypothesis, scientists must rely on a host of auxiliary theories: additional theories needed to deduce predictions from the hypothesis being tested. The role of auxiliary theories may seem trivial when dealing with mundane predictions like the expected path of an arrow. However, auxiliary theories make a big difference when deriving predictions from a typical hypothesis in physics, chemistry, or astronomy. To test the predictions of quantum field theory by running experiments in the Large Hadron Collider, for example, physicists need to consider detailed information about the system’s initial conditions, and they need to invoke complicated auxiliary theories in order to predict what will be observed in the particle detectors. In particular, they need to employ numerous auxiliary theories about the workings of extraordinarily complex equipment!

So, if a hypothesis seems to give an incorrect prediction, the hypothesis itself might be wrong; but it might instead be the case that someone made a mistake about the initial conditions or auxiliary theories. Let’s call this the troubleshooting problem. (In the philosophical literature, it is called the Duhem-Quine problem, named for two prominent thinkers who emphasized the difficulty: physicist Pierre Duhem and philosopher Willard Van Orman Quine.) For a real-life example of the troubleshooting problem in action, consider the case of the Michelson-Morley experiment. As we saw in Chapter 6, physicists did not immediately abandon the aether theory when it yielded incorrect predictions in that famous experiment. Instead, they blamed all sorts of experimental variables and auxiliary theories.

Note: For further discussion of the Duhem-Quine problem, see Peter Godfrey-Smith, Theory and Reality: An Introduction to the Philosophy of Science, Second Edition (Chicago: University of Chicago Press, 2021), 41-43.
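The logical shape of the troubleshooting problem can be written out schematically. The notation here is my own shorthand rather than anything standard to this chapter: $H$ is the hypothesis under test, $I$ the assumed initial conditions, $A_1, \dots, A_n$ the auxiliary theories, and $P$ the prediction.

```latex
\begin{align*}
&(H \land I \land A_1 \land \dots \land A_n) \rightarrow P \\
&\lnot P \\
&\therefore\ \lnot (H \land I \land A_1 \land \dots \land A_n) \\
&\equiv\ \lnot H \lor \lnot I \lor \lnot A_1 \lor \dots \lor \lnot A_n
\end{align*}
```

Modus tollens still applies, but what it refutes is the whole conjunction. Logic alone guarantees only that at least one conjunct is false; it does not say which one.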

As the troubleshooting problem reveals, theory testing is a complex process that involves many hypotheses at once. This makes “falsifiability” practically useless as a demarcation criterion. To see why, consider a paradigmatic example of pseudoscience: phrenology, a 19th-century doctrine that purported to explain and predict personality traits by the shape of a person’s skull. Is this idea falsifiable? Well, maybe not by itself; but then again, neither are the best theories of real science! So, the phrenologist can simply claim that his theory will yield falsifiable predictions when conjoined with appropriate auxiliary hypotheses.

It’s easy to make up auxiliary hypotheses in order to derive falsifiable predictions from almost any pseudoscientific theory. For example, suppose the phrenologist claims that a certain skull shape indicates a greedy personality. That claim would be difficult to test on its own, but if he adds an auxiliary hypothesis specifying what sorts of behaviors we can expect from greedy people, it may be possible to test whether the skull shape in question is statistically correlated with the specified behaviors. To do so would be to treat the phrenologist’s claim as a genuine scientific hypothesis, albeit one that will surely fail the empirical test.

As this example illustrates, falsifiability often is a matter of how scientists treat or handle their hypotheses, rather than an intrinsic property of the hypotheses themselves. In some of his writings, Popper conceded this point. He acknowledged that falsifiability may be a function of how hypotheses are handled: to handle a hypothesis scientifically is to try to refute it. Perhaps, then, a line of demarcation between science and pseudoscience might be drawn by focusing on scientific behavior. The more strenuously one tries to refute one’s own hypothesis, the more scientific one’s behavior. The suggestion that hypotheses can be handled more scientifically or less scientifically is an important idea, and we’ll return to it in the section on scientific methods later in this chapter.

Unfortunately, however, this way of characterizing scientific behavior still won’t solve the demarcation problem, for several reasons. First, the effort expended to test and potentially refute one’s own hypotheses is a matter of degree, not an all-or-nothing binary property. If the distinction between science and pseudoscience is only a matter of degree, though, there can be no sharp line of demarcation: any such line drawn on a continuous spectrum would be arbitrary. Second, even if we were to draw such an arbitrary line somewhere on the spectrum of behaviors, this demarcation criterion is practically useless unless we have some way to assess or measure the degree to which a putatively scientific community is actively “trying” to refute their hypotheses. Third, and most importantly, the specified behavior is neither necessary nor sufficient for science. Trying to falsify one’s own hypothesis is not sufficient for science, obviously, since research in non-scientific disciplines (philosophy, history, mathematics, etc.) frequently involves strenuous efforts to find fault in one’s own hypotheses. It isn’t necessary for science either, apparently, because scientific research doesn’t always exemplify such laudable behavior. In point of fact, it doesn’t appear to be the case—as a sociological observation—that genuine scientists and scientific communities consistently behave in the way Popper recommends. Quite often, real-life scientists expend much effort seeking confirmatory evidence for their favored hypotheses and display relatively little enthusiasm for disconfirming evidence.

Widely accepted judgments about what to call “science” and what to label “non-science” or “pseudoscience” frequently have little to do with falsifiability, whether construed as an intrinsic property of hypotheses or as a way of handling hypotheses. Typically, I suggest, such labels rest on judgments about the plausibility of the hypotheses in question. Phrenology isn’t science, not because it can’t yield falsifiable predictions, nor because its practitioners behaved badly, but because the shape of a person’s skull just isn’t a plausible explanation for the intricacies of human personalities and character traits. To avoid wasting our time, money, and effort investigating such hokey ideas, we must rely on our assumptions or presuppositions about what sorts of hypotheses are plausible. Our presuppositions and biases may (indeed, must) come into play when assessing which theories and research projects are worth taking seriously. Scientists make these subjective judgments all the time, and the collective judgments of the scientific community result in a vague and gradually evolving consensus about what deserves the label “science” and what does not.